The SCHAEFFER Dataset - Spectromorphogical Corpus of Human-annotated Audio with Electroacoustic Features For Experimental Research

Tid: Ti 2024-11-26 kl 15.00 - 16.00

Plats: Room 1E207, KMH Royal College of Music

Videolänk: Zoom

Språk: English

Medverkande: Maurizio Berta, KTH, PhD Candidate in the SMC Group

Abstract

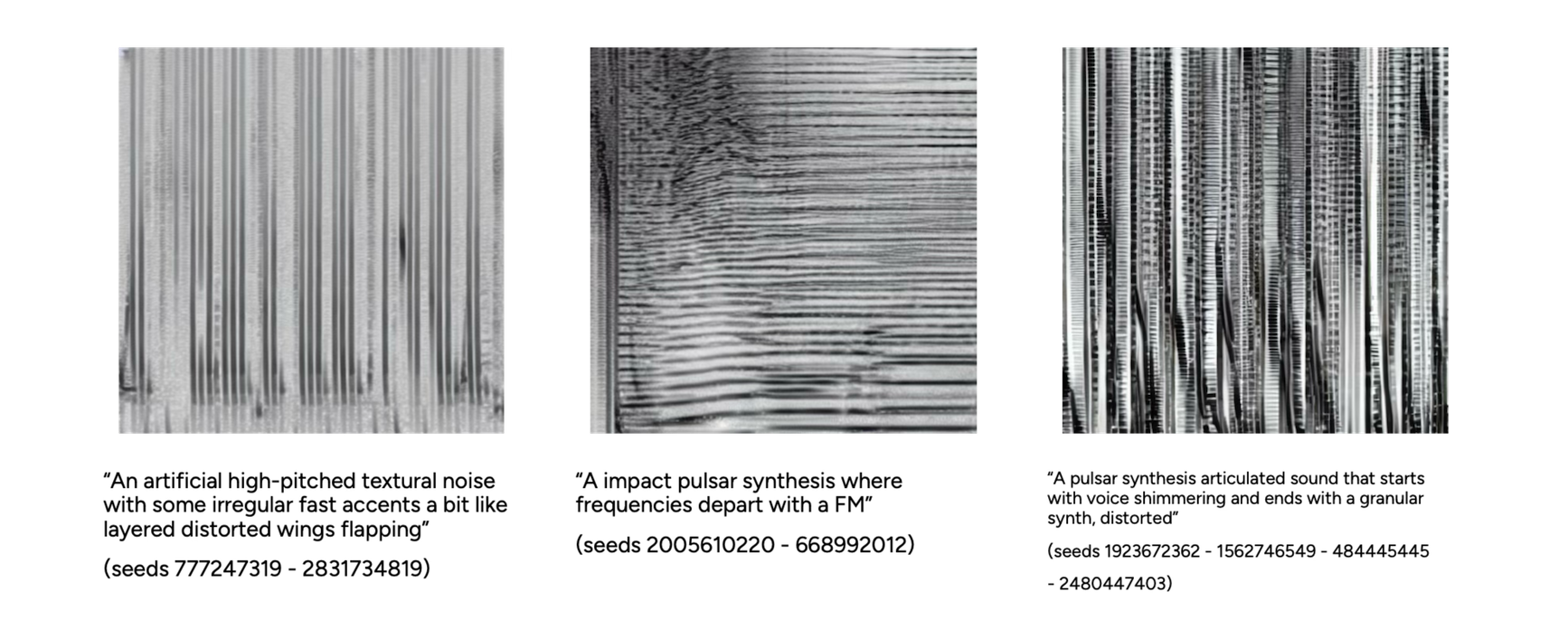

This seminar introduces the SCHAEFFER Dataset, a collection of spectromorphologically annotated sound objects. A project was developed to investigate the automatic generation and analysis of audio using Schaefferian’s type descriptions. The base assumption for this project is the absence of a dataset of this kind, thus making it impossible to utilize several machine learning algorithms on the tasks of experimental electroacoustic music. The data was collected and annotated in a crowd-sourced manner with the aid of students from the Conservatories of Turin, Genoa, Pesaro, and Bologna. Each sound object submitted was required to have a duration between 5 to 10 seconds and to be defined with a free-form description and a set of spectromorphological labels.

The files were all released under a CC-By license to ensure rightful attribution to the dataset’s contributors. The dataset was then tested on the tasks of text-to-audio generation and automatic analysis. The crowd-sourced process allowed us to collect 788 annotated sound objects. However, this size proved to be too small, resulting in the dataset not being representative of all the classes defined within itself. Despite this issue, it was still possible to train a classifier that performed well on some metrics. However, the text-to-audio generation was only functional in generating from typological descriptions, but not from morphological phrases. This collection marks the initial steps in building datasets of sound objects. However, more data is needed to achieve a coherent data representation.