Introduction to supercomputers

If you are new to supercomputers, here is a brief explanation of what they are, along with some of the main terminology.

What is a supercomputer?

- The most powerful computers used for science, technology and artificial intelligence (AI) computing are known as “supercomputers” and are optimised for performing floating point calculations. In contrast, powerful computers optimised for handling a large number of transactions efficiently and reliably, such as for database administration purposes, are referred to as “mainframes”.

- Today’s supercomputers are extremely large computer systems that are built out of many smaller computer processors, each of which is similar to the processor in a personal computer (PC) or laptop.

How supercomputers work

- All the processors in a supercomputer can work (that is, perform computations) at the same time; this is known as parallel computing. By doing many calculations in parallel, a supercomputer can complete work that requires large amounts of computation much faster than a single-processor computer.

- Programs and code designed for single-processor computers need to be modified so the calculations done by the program code can be run in parallel to take advantage of the large number of processors in a supercomputer. This process of adapting a program or application to run on a supercomputer is sometimes referred to as “parallelising” or “porting” the code.
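As a rough illustration of what parallelising means, the sketch below computes the same result twice: once with a single worker and once by splitting the work into chunks that several workers handle at the same time. It is only a minimal sketch in Python. The function names are made up for this example, and Python threads stand in for the processors of a supercomputer (real supercomputer codes typically use other tools, and threads in Python will not necessarily make this particular calculation faster).

```python
from concurrent.futures import ThreadPoolExecutor


def sum_of_squares(numbers):
    """Serial version: one worker handles every number in turn."""
    return sum(n * n for n in numbers)


def split_into_chunks(numbers, n_chunks):
    """Divide the work into at most n_chunks pieces of roughly equal size."""
    size = max(1, (len(numbers) + n_chunks - 1) // n_chunks)  # ceiling division
    return [numbers[i:i + size] for i in range(0, len(numbers), size)]


def parallel_sum_of_squares(numbers, n_workers=4):
    """Parallel version: each worker sums one chunk, then the partial sums are combined."""
    chunks = split_into_chunks(numbers, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partial_sums = list(pool.map(sum_of_squares, chunks))
    return sum(partial_sums)


data = list(range(100_000))
serial_result = sum_of_squares(data)
parallel_result = parallel_sum_of_squares(data, n_workers=4)
print(serial_result == parallel_result)  # both versions give the same answer
```

The important point is the change in structure: the work is divided up, the pieces are processed independently, and the partial results are combined at the end.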

CPU vs GPU supercomputers

Initially, supercomputers were built using central processing units (CPUs) and memory. In the past, a CPU consisted of one or several chips that together formed a single processing unit. Over time, CPU chips have evolved to contain multiple processors. The individual processors in a CPU chip are now called cores.

CPUs were designed to handle a wide range of complex tasks very quickly but they are limited in the number of tasks they can perform in parallel. Meanwhile, graphics processing units (GPUs) were developed to make real-time graphics work faster (for example, for computer games). GPUs were designed as chips with many processors/cores so they could tackle large numbers of relatively simple tasks rapidly in parallel.

People gradually realised that the inherent parallelism of GPUs meant they could be used for supercomputers, especially as GPUs can perform certain types of parallel computations faster than CPUs. This has led to the development of supercomputer systems that now contain both CPUs and GPUs.

Parts of a supercomputer

Clusters, racks and blades

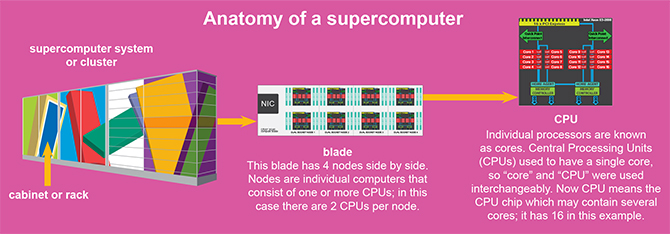

- Modern supercomputer systems are built as clusters. That means that they are built of many nodes, where each node is somewhat similar to a desktop workstation or personal computer.

- A supercomputer system or cluster is usually made up of many cabinets (also known as racks), which look like large cupboards standing side-by-side. Many supercomputers are so large that they take up several rows as there are too many cabinets to fit in a single row.

- Each cabinet or rack contains nodes, which are essentially stacked one above the other inside the cabinet, and then connected to each other via cables.

- Initially, clusters were built of nodes that were each a complete computer containing a motherboard, which held the CPU and some random-access memory (RAM) for holding the data being worked on, along with the power supply, cooling fans, and so forth, all mounted in a case similar to a desktop workstation.

- It was then realised that clusters could be made more economical and efficient by putting a fixed number of nodes in a single case (or enclosure) and having a common power supply and cooling fan for everything in that enclosure. The motherboards for the nodes in a single enclosure are often inserted into a type of circuit board, known as a backplane, which has connectors that supply power and network connections to all the motherboards plugged into it. The individual motherboards are referred to as blades.

- A further development made systems even more compact by building supercomputers with blades consisting of multiple nodes (instead of individual nodes). You can see photos of the blades being put into a Dardel cabinet after being removed during the installation process.
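The way these parts nest inside each other (cabinets contain blades, blades contain nodes, nodes contain CPUs, and CPUs contain cores) can be sketched as a small calculation. The class name, field names and all the numbers below are made up purely for illustration and do not describe any real system.

```python
from dataclasses import dataclass


@dataclass
class Cluster:
    """Hypothetical description of a cluster; every field is an illustrative assumption."""
    cabinets: int
    blades_per_cabinet: int
    nodes_per_blade: int
    cpus_per_node: int
    cores_per_cpu: int

    def total_nodes(self):
        return self.cabinets * self.blades_per_cabinet * self.nodes_per_blade

    def total_cores(self):
        return self.total_nodes() * self.cpus_per_node * self.cores_per_cpu


# Invented numbers, chosen only to show how quickly the totals grow.
example = Cluster(
    cabinets=10,
    blades_per_cabinet=64,
    nodes_per_blade=4,
    cpus_per_node=2,
    cores_per_cpu=64,
)
print(example.total_nodes())  # 2560
print(example.total_cores())  # 327680
```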

CPU and GPU nodes (plus GPU devices/GCDs)

- Each node is an individual computer that usually contains one or more CPUs (each with many cores) together with some random-access memory (RAM) for holding the data being worked on (as in the illustration above), all on a single motherboard. Nodes without GPUs are called CPU nodes.

- GPU nodes are built by combining one or several CPUs with one or more GPUs and RAM. Note that the term “GPU” is often used to refer to a physical chip that contains one or several GPU devices. The individual GPU devices are also called graphics compute dies (GCDs). When there are multiple GCDs on a single GPU chip, programmers using the system can treat the individual GCDs as separate GPUs, so the GPU devices themselves may also be called “GPUs”. (Since “GPU” can mean a node, a chip or a GPU device, it is important to be aware of which meaning is intended in each context!)

- For examples of how GPU nodes are structured, see the information about the GPU nodes in the LUMI supercomputer at docs.lumi-supercomputer.eu/hardware/lumig or the diagram of a GPU node in the Dardel system at PDC at www.pdc.kth.se/hpc-services/computing-systems/about-the-dardel-hpc-system-1.1053338. As shown in the diagram, the Dardel GPU nodes contain four chips, each of which has two GCDs, thus giving each of Dardel’s GPU nodes a total of eight GCDs.
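The counting for a Dardel GPU node (four chips with two GCDs each) can be written out as a short sketch. The class and field names are hypothetical and are used only for this illustration. The chip and GCD counts come from the description above.

```python
from dataclasses import dataclass


@dataclass
class GpuNode:
    """Hypothetical model of a GPU node; the names are illustrative assumptions."""
    gpu_chips: int      # physical GPU chips in the node
    gcds_per_chip: int  # graphics compute dies (GCDs) on each chip

    def gcd_count(self):
        return self.gpu_chips * self.gcds_per_chip

    def devices(self):
        """List every GCD as a (chip index, GCD index) pair."""
        return [
            (chip, gcd)
            for chip in range(self.gpu_chips)
            for gcd in range(self.gcds_per_chip)
        ]


dardel_gpu_node = GpuNode(gpu_chips=4, gcds_per_chip=2)
print(dardel_gpu_node.gcd_count())    # 8
print(dardel_gpu_node.devices()[:3])  # [(0, 0), (0, 1), (1, 0)]
```

Each of the eight entries corresponds to something a programmer might refer to as a “GPU”, even though the node holds only four physical GPU chips.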