PhD position: AI guided multimodal/multichannel spatiotemporal imaging

The PhD position is on developing mathematical theory and algorithms for foundational models for image reconstruction in multichannel dynamic tomographic imaging. Specific emphasis will be on regularisation for reconstruction of a dynamic image from time series data obtained from dynamic PET/CT and dynamic photon-counting X-ray CT.

Background & motivation

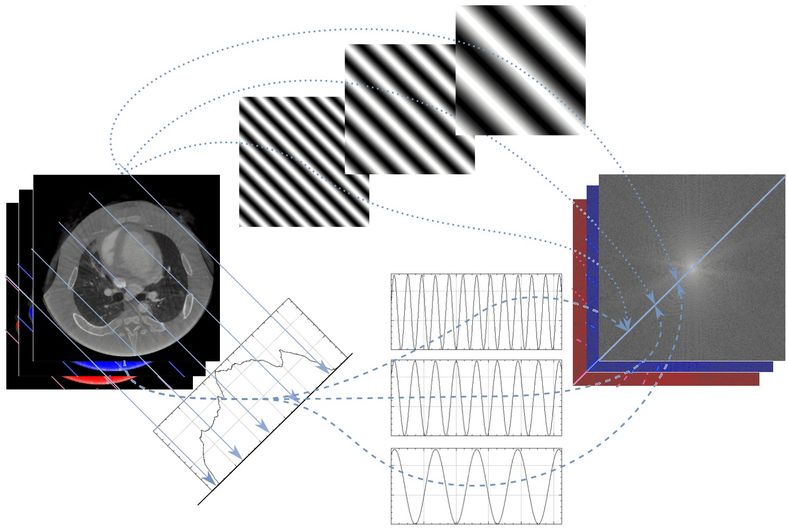

Tomographic imaging techniques, like X-ray computed tomography (CT) and positron emission tomography (PET), probe the object being imaged with a wave or particle from multiple known directions. The data represent indirect, noisy observations of the internal structure (the image), so one needs techniques from inverse modelling (reverse engineering) to computationally recover the image from the data. Such reverse engineering is often ill-posed, meaning that methods that try to recover the image by fitting the observed data alone are unstable (small changes in the data lead to large changes in the image). Hence, a key element is to stabilise the recovery by using an appropriate regularisation method. Finding an appropriate regularisation relies on a priori information, and this becomes more challenging when data and images are multidimensional and dynamic.
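The instability described above can be illustrated with a small numerical sketch. Everything in it is an assumption made for illustration: the forward operator is a hypothetical 1D Gaussian blur rather than a tomographic projector, and classical Tikhonov regularisation stands in for the learned regularisers that the project targets.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical ill-conditioned forward operator: a 1D Gaussian blur matrix.
n = 50
idx = np.arange(n)
A = np.exp(-0.5 * ((idx[:, None] - idx[None, :]) / 3.0) ** 2)
A /= A.sum(axis=1, keepdims=True)

# Ground-truth "image" (piecewise constant) and noisy indirect data.
f_true = np.zeros(n)
f_true[15:35] = 1.0
g = A @ f_true + 1e-3 * rng.standard_normal(n)

# Data fit only: least squares with NumPy's default machine-precision cutoff.
f_naive = np.linalg.lstsq(A, g, rcond=None)[0]

# Tikhonov regularisation: solve (A^T A + alpha I) f = A^T g.
alpha = 1e-3  # illustrative value, not tuned
f_tik = np.linalg.solve(A.T @ A + alpha * np.eye(n), A.T @ g)

err_naive = np.linalg.norm(f_naive - f_true)
err_tik = np.linalg.norm(f_tik - f_true)
print(f"error, data fit only: {err_naive:.3e}")
print(f"error, Tikhonov:      {err_tik:.3e}")
```

Even with a noise level as small as the one used here, the data-fit-only reconstruction is dominated by amplified noise, whereas the regularised one stays close to the true signal at the cost of some smoothing.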

Research topics

A particular research topic is to develop, train and evaluate physics-informed multimodal neural network architectures for multichannel spatiotemporal image reconstruction. The idea is to efficiently integrate complementary information from the different channels in the data and image through physics-informed couplings. One starting point is to explore transformer architectures with physics-informed cross-attention. Another is architectures based on state space models (SSMs), which predict an output sequence from a continuous input sequence through learned state and output equations. A key aspect will be to handle multiscale phenomena. Additionally, one can view dynamics as an additional (temporal) channel that is modelled with another physics-informed deep neural network. Here we consider a sequence of deformations, each modelled with a foundational model for image registration. See the research program for more information.
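To make the state and output equations of an SSM concrete, here is a minimal sketch assuming a discrete-time linear model, x[k+1] = A x[k] + B u[k] and y[k] = C x[k] + D u[k]. The function name `ssm_scan` and the matrices are hypothetical placeholders; in a learned SSM the matrices would be trained from data, and the architectures explored in the project may differ substantially.

```python
import numpy as np

def ssm_scan(A, B, C, D, u, x0=None):
    """Run a discrete linear state space model over an input sequence.

    u has shape (T, d_in); the returned output has shape (T, d_out).
    """
    x = np.zeros(A.shape[0]) if x0 is None else x0
    outputs = []
    for u_k in u:
        outputs.append(C @ x + D @ u_k)  # output equation
        x = A @ x + B @ u_k              # state equation
    return np.stack(outputs)

# Placeholder one-state model driven by a constant input sequence.
A = np.array([[0.5]])
B = np.array([[1.0]])
C = np.array([[1.0]])
D = np.array([[0.0]])
u = np.ones((3, 1))
y = ssm_scan(A, B, C, D, u)
```

The hidden state carries information forward in time, so each output depends on the whole input history up to that point.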

Cross-disciplinary research environment

The PhD position is part of a larger collaboration involving Mats Persson at the Physics of Medical Imaging division within the Department of Physics at KTH and Massimiliano Colarieti Tosti at the Division of Biomedical Engineering within the Department of Biomedical Engineering and Health Systems at KTH. They will each recruit one PhD student who will also work in the project. The collaboration becomes especially important when the focus shifts towards scaling up the AI models so that they can be applied to dynamic PET/CT and spectral CT.

Link to apply

Use the link below to apply for this position. The deadline is May 19, 2025.