Active perception

Despite tremendous progress in robotics, robots still cannot perform many tasks that involve close interaction with humans and objects with the desired level of robustness and efficiency.

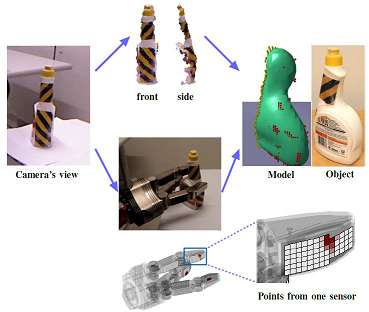

Visual sensors, both regular and RGB-D cameras, are the ones most commonly used for object, scene and activity understanding. The need to model objects and actions simultaneously has motivated our work on active, multisensory segmentation and object/action tracking. When humans interact with a scene and its objects, the spatiotemporal relationships between the objects and the actor can be exploited to improve the understanding of both. For example, the posture of the human hand or arm can reveal an object’s properties (e.g. open at the top) or state (full or empty). Conversely, known or extracted object properties (pose, shape) can help disambiguate human posture, which is commonly under-constrained. Our work in this area therefore focuses on sensor integration and proactive interaction with the environment to guide an informed exploration and learning process.

Projects

We are currently involved in the following projects:

Contact

For more information, please contact the involved faculty members: