Sound in Human-Robot Interaction

We are very glad to invite you to the Sound and Music Interaction Seminar with Hannah Pelikan.

Time: Tue 2022-10-18 15.00

Location: Room 1440 "Henrik Eriksson", Osquars backe 2, floor 4

Video link: Zoom link

Language: English

Participating: Hannah Pelikan, Linköping University

Abstract

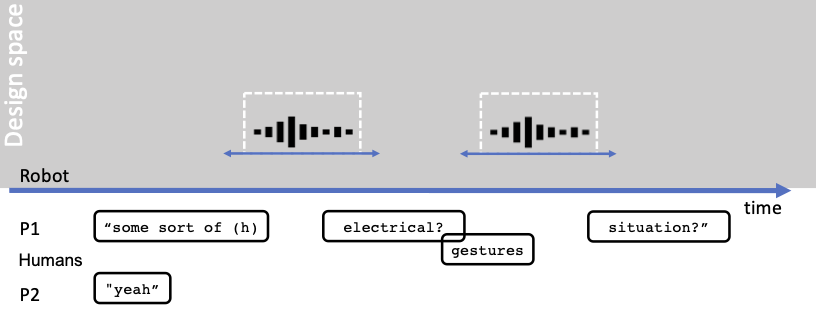

Non-lexical sounds such as ouch, wohoo, and tadaa are an important element of human-human communication, and robots can also use them to communicate. From an interactional perspective, sound is interesting because it sits at the margin of language: non-lexical vocalizations are intuitively understandable to speakers across languages and are often closely intertwined with bodily movement. In my talk, I will discuss how robots can use sound to facilitate interaction with humans. Using detailed multimodal transcriptions of video snippets, I will demonstrate how the specific timing of a sound within a sequence can influence how it is interpreted. Building on conversation analytic practices for working with video data, I will present a voice-over technique that grounds the design of robot sound in specific examples and makes it possible to test sounds in actual interactional sequences.

Bio

Hannah Pelikan is a PhD candidate in language and culture at the Department of Culture and Society (IKOS) at Linköping University and was recently a visiting scholar in Information Science at Cornell University. She holds an MSc in Interaction Technology from the University of Twente and a BSc in Cognitive Science from Osnabrück University. Her current research interests include understanding how humans interpret robot sound in everyday interaction and informing robot sound design with insights from ethnomethodology and conversation analysis. As part of the Non-Lexical Vocalizations project, funded by the Swedish Research Council, she translates insights into how humans use non-lexical sound into her work on robots.