Giacomo attended the STACOM (Statistical Atlases and Computational Modelling of the Heart) workshop to present our paper, “Reducing the number of leads for ECG Imaging with Graph Neural Networks and meaningful latent space”. The work demonstrates the potential to remove a large number of leads (more than half!) during ECG Imaging.

This is joint work with Giacomo Verardo (KTH), Daniel F. Perez-Ramirez (RISE/KTH), Samuel Bruchfeld (KI), Magnus Boman (KI), Marco Chiesa (KTH), Sabine Koch (KI), Gerald Q. Maguire Jr. (KTH), and Dejan Kostic (KTH).

The paper is available here, while the full abstract is below:

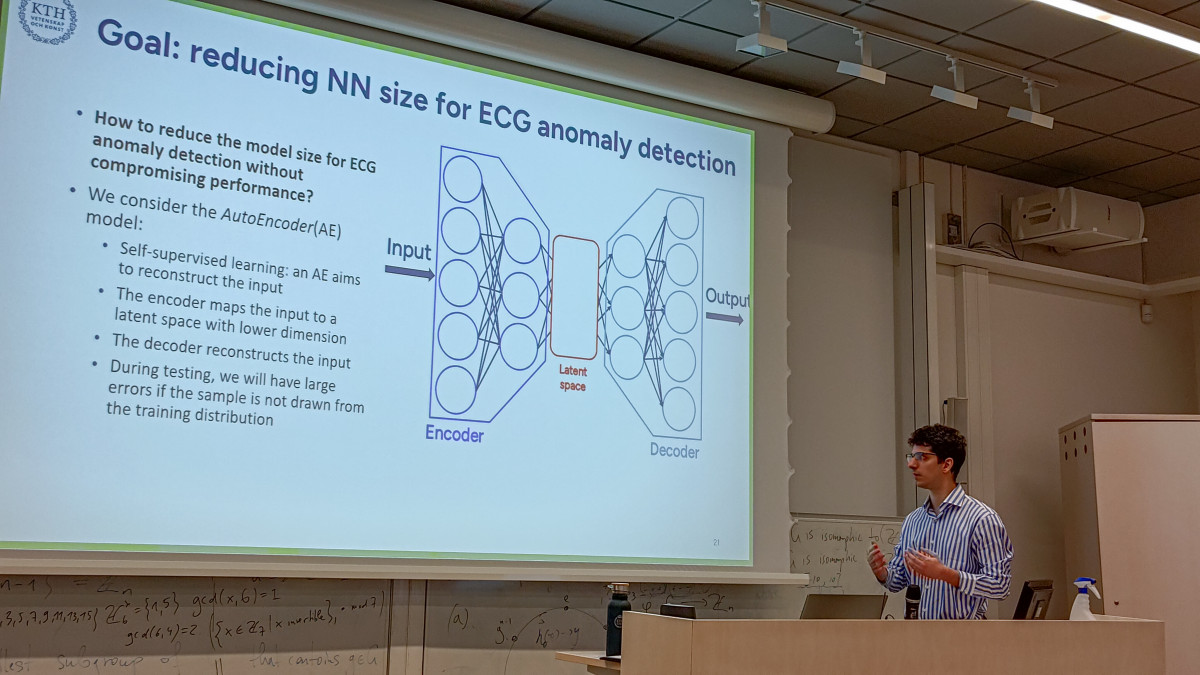

ECG Imaging (ECGI) is a technique for cardiac electrophysiology that allows reconstructing the electrical propagation through different parts of the heart using electrodes on the body surface. Although ECGI is non-invasive, it has not become clinically routine due to the large number of leads required to produce a fine-grained estimate of the cardiac activation map. Using fewer leads could make ECGI practical for clinical patient care. We propose to tackle the lead reduction problem by enhancing Neural Network (NN) models with Graph Neural Network (GNN)-enhanced gating. Our approach encodes the leads into a meaningful representation and then gates the latent space with a GNN. In our evaluation with a state-of-the-art dataset, we show that keeping only the most important leads does not increase the cardiac reconstruction and onset detection error. Despite dropping almost 140 leads out of 260, our model achieves the same performance as another NN baseline while reducing the number of leads. Our code is available at github.com/giacomoverardo/ecg-imaging.
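To make the idea of "encode the leads, then gate the latent space with a GNN" more concrete, here is a minimal sketch in NumPy. It is not the model from the paper (see the linked repository for that). The abstract does not specify the encoder, how the lead graph is built, or how the gates are computed, so everything below is a hypothetical stand-in. Each lead gets a latent vector from a toy encoder (`encode_leads`). A graph is built over electrode positions using k-nearest neighbours (`knn_adjacency`). One normalized graph-convolution step then produces a sigmoid gate per lead (`gcn_gate`). Only the top-scoring leads are kept (`keep_top_k`). All weights and data are random placeholders, and dropping 140 of 260 leads simply mirrors the figure quoted in the abstract.

```python
import numpy as np

def knn_adjacency(positions, k):
    """Symmetric k-nearest-neighbour graph over electrode positions (hypothetical construction)."""
    n = positions.shape[0]
    dists = np.linalg.norm(positions[:, None, :] - positions[None, :, :], axis=-1)
    np.fill_diagonal(dists, np.inf)            # no self-loops here; added back in gcn_gate
    nbrs = np.argsort(dists, axis=1)[:, :k]    # k closest electrodes for each lead
    adj = np.zeros((n, n))
    adj[np.repeat(np.arange(n), k), nbrs.ravel()] = 1.0
    return np.maximum(adj, adj.T)              # make the graph undirected

def encode_leads(signals, w_enc):
    """Toy per-lead encoder: (n_leads, n_samples) -> latent (n_leads, d)."""
    return np.tanh(signals @ w_enc)

def gcn_gate(latent, adj, w_gnn):
    """One GCN step (D^-1/2 (A+I) D^-1/2 H W) followed by a sigmoid: one gate per lead."""
    a_hat = adj + np.eye(adj.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))
    a_norm = a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]
    scores = (a_norm @ latent @ w_gnn)[:, 0]
    return 1.0 / (1.0 + np.exp(-scores))

def keep_top_k(gates, k):
    """Indices of the k leads with the largest gate values, in ascending index order."""
    return np.sort(np.argsort(-gates)[:k])

rng = np.random.default_rng(0)
n_leads, n_samples, d = 260, 500, 16
positions = rng.normal(size=(n_leads, 3))         # placeholder electrode coordinates
signals = rng.normal(size=(n_leads, n_samples))   # placeholder body-surface signals
w_enc = rng.normal(scale=0.05, size=(n_samples, d))
w_gnn = rng.normal(scale=0.5, size=(d, 1))

latent = encode_leads(signals, w_enc)
gates = gcn_gate(latent, knn_adjacency(positions, k=6), w_gnn)
kept = keep_top_k(gates, k=n_leads - 140)         # drop 140 of the 260 leads
mask = np.zeros(n_leads)
mask[kept] = 1.0
gated_latent = latent * (gates * mask)[:, None]   # dropped leads contribute nothing downstream
print(len(kept), gated_latent.shape)              # prints: 120 (260, 16)
```

In a trained model, the downstream reconstruction network would see only `gated_latent`. The graph lets a lead's gate depend on its spatial neighbours, which is one plausible reason to use a GNN rather than scoring each lead independently. How the actual model learns its gates is described in the paper, not here.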