Multimodal Machine Learning for Social Robots

Time: Wed 2024-02-21, 16:00

Location: Room 1440 "Henrik Eriksson", Osquars backe 2, floor 4

Video link: Zoom link

Language: English

Speaker: Angelica Lim, Ph.D., Assistant Professor, School of Computing Science, Simon Fraser University

Abstract

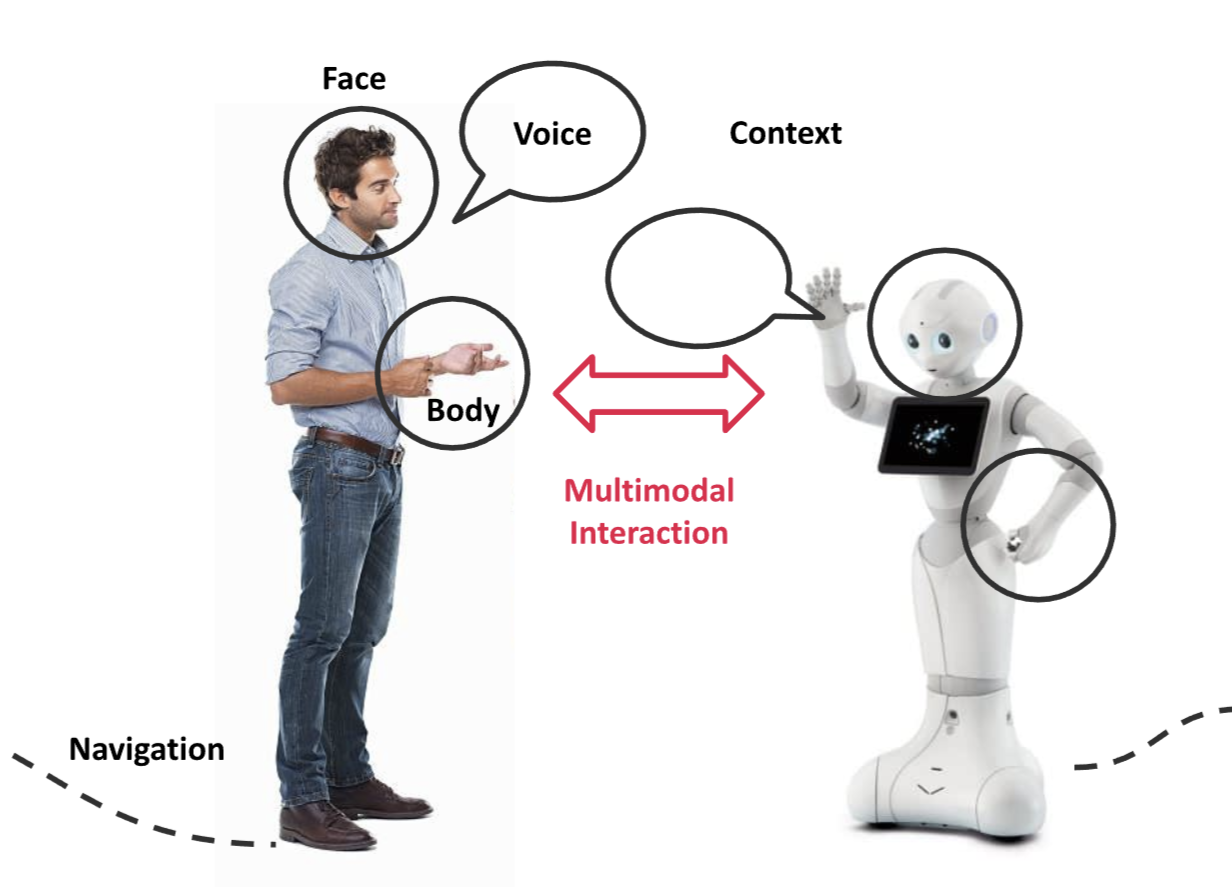

In this talk, I will discuss the challenges and opportunities in creating multimodal machine learning systems that can analyze, detect, and generate non-verbal communication, including gestures, gaze, auditory signals, and facial expressions. Specifically, I will give examples of how we might enable robots to analyze human social signals (including emotions, mental states, and attitudes) across cultures, and to recognize and generate expressions with diversity in mind.

Bio

Dr. Angelica Lim is the Director of the Rosie Lab and an Assistant Professor in the School of Computing Science at Simon Fraser University. Previously, she led the Emotion and Expressivity teams for the Pepper humanoid robot at SoftBank Robotics. She received her B.Sc. in Computing Science (Artificial Intelligence Specialization) from SFU, and her Master's and Ph.D. in Computer Science (Intelligence Science) from Kyoto University, Japan. She has been featured on the BBC and TEDx, has hosted a TV documentary on robotics, and was recently featured in Forbes' 20 Leading Women in AI.

Passionate about diversity, she is the Director of the Invent the Future (AI4ALL) program for Canada, teaches CMPT 120: Introduction to Computing Science and Programming, and has developed the SFU Computer Science Teaching Toolkit, with the goal of inspiring people of all backgrounds to pursue Computing Science. She also teaches Introduction to Software Engineering and Affective Computing.